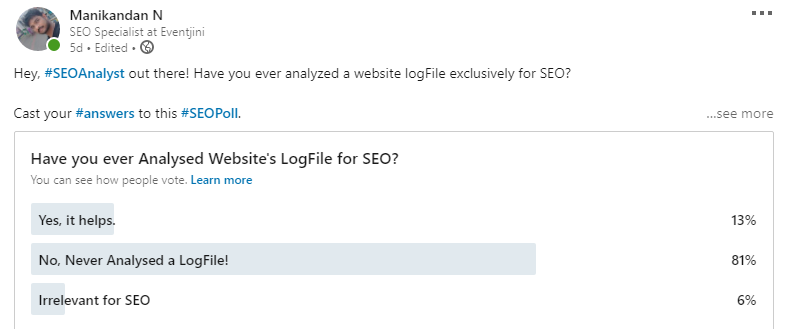

I recently conducted a #SEOPoll on LinkedIn about log file analysis for SEO. The question was simple: “Have you ever analysed a website log file for SEO?”

A total of 48 people, with titles such as digital marketer, SEO specialist, and SEO manager, answered the poll.

The result was shocking, and it became the inspiration for this post.

Guess what? 81% of the digital marketers and SEO specialists who answered had never analyzed a log file!

Real-World Problem

What if I told you, “The fundamental job of an SEO analyst is neither ranking a website nor bringing organic traffic”?

Ranking and organic traffic are the second priority!

Agree or not?

The primary task of an SEO analyst is to make sure that the website, and every potential page on it, is crawlable and indexable by search engines.

To ensure this, we go to the “Legacy Tools and Reports” section of Google Search Console and analyze the daily crawl stats from the search engine.

Must read 👉 “Guide to Export 25,000 rows using GSC API without coding!!!”

According to the Crawl Stats above for Googlebot activity on my website, the peak was 537 pages crawled in a single day, while the lowest was 13 pages.

What’s the big deal? That’s how the Google crawl happens, isn’t it?

Agreed, but why does Googlebot crawl 500+ pages in a day when my website has only 90 URLs submitted in the sitemap (including posts, pages, and media attachments)?

Isn’t it a waste of the crawl budget and bandwidth of the website?

Yes, it’s a waste of both.

Hence, as SEO analysts, we have to identify where the crawl budget is going wrong so that the website’s bandwidth is used efficiently.

Importance of Log File Analysis for SEO

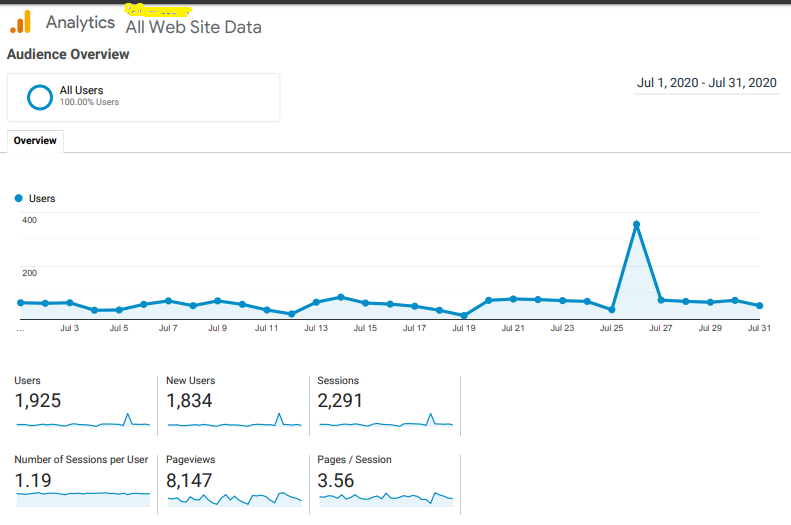

To find more insights, I moved to Google Analytics.

Google Analytics is one of the free tools digital marketers use to understand user behavior.

We digital marketers often believe the data in Google Analytics blindly, without a second thought.

Then I found that Google Analytics data isn’t 100% accurate!

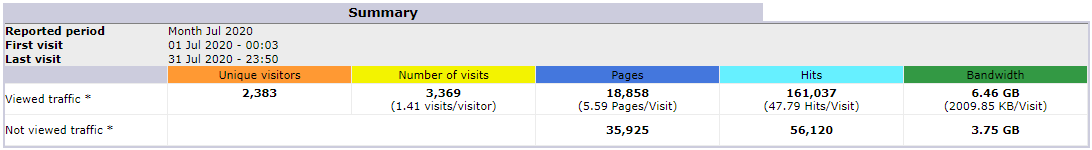

These are the traffic stats from Google Analytics for July 2020, alongside the data downloaded from the server log file for the same period.

There is a clear mismatch in the number of visitors and pageviews, which shows that Google Analytics data isn’t 100% accurate.

The difference exists because Google Analytics collects “client-side data”: its tracking code records a hit only when it is actually triggered in the visitor’s browser.

The log file gives you “server-side data,” which is 100% accurate. The server records every request made to the website 24 hours a day, 7 days a week, 365 days a year, without any break!

That’s how helpful server log file analysis is for digital marketers.

What is a Log File in SEO?

Technically, every time a user or a crawler visits a website, the server records an entry for each request. The file that collects these entries is called the log file. Log files exist for purposes such as technical auditing, error handling, and troubleshooting.

Each entry in a log file consists of the following fields:

- Client IP – xxx.7x.xx6

- Timestamp – [01/Aug/2020:08:40:06 -0400]

- Method (GET/POST)

- Requested URL – /business/

- Protocol – HTTP/1.1

- HTTP Status Code – 200

- Response Size (bytes) – 9415

- Referrer – “-” (no referrer)

- User-Agent – Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

A sample log file entry with Googlebot as the user-agent:

66.xxx.7x.xx6 - - [01/Aug/2020:08:40:06 -0400] "GET /business/ HTTP/1.1" 200 9415 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
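To see how these fields line up in practice, here is a minimal Python sketch that splits one entry into them. It assumes your server writes the Apache/Nginx “combined” log format, as the sample entry above appears to; other formats need a different pattern. The names `LOG_PATTERN` and `parse_log_line` are hypothetical, not part of any tool.

```python
import re
from datetime import datetime

# Assumption: Apache/Nginx "combined" log format, like the sample entry above.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<url>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_log_line(line):
    """Return a dict with one entry's fields, or None if the line doesn't match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    # A "-" size means no response body was sent.
    entry["size"] = 0 if entry["size"] == "-" else int(entry["size"])
    entry["timestamp"] = datetime.strptime(entry["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    return entry


sample = (
    '66.xxx.7x.xx6 - - [01/Aug/2020:08:40:06 -0400] "GET /business/ HTTP/1.1" 200 9415 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
entry = parse_log_line(sample)
print(entry["url"], entry["status"], entry["user_agent"])
```

Every report later in this post starts from a step like this one: once each line is a set of named fields, filtering by bot, URL, status code, or date is straightforward.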

As a digital marketer or SEO analyst, you can learn the following from the log file:

- Actual bot crawl volume

- Response code errors

- Bot crawl priority

- Last crawl date

- Temporary redirects

- Crawl budget waste

Issues with Getting a Log File for an SEO Audit

Downloading a log file from the server isn’t that easy. There are plenty of real-world challenges associated with it.

- Because log files contain Personally Identifiable Information (PII) such as IP addresses, you need several permissions to download them unless you own the website.

- Log files come in 8 major formats, so the analyst has to work differently with each one.

- File size is another issue: the longer the date range you analyse, the bigger the log file.
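A large log file doesn’t have to mean large memory use. As a minimal sketch, assuming Python 3 and that older, rotated logs are gzip-compressed files ending in `.gz` (common, but not universal), you can stream the file one line at a time instead of opening all of it in a spreadsheet. The function names and the demo file name are hypothetical.

```python
import gzip
import os
import tempfile


def iter_log_lines(path):
    """Yield one log line at a time, so the whole file never sits in memory.

    Assumption: rotated logs are gzip-compressed and end in ".gz".
    """
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
        for line in handle:
            yield line.rstrip("\n")


def count_googlebot_hits(path):
    """Count lines mentioning Googlebot while reading the file lazily."""
    return sum(1 for line in iter_log_lines(path) if "Googlebot" in line)


# Demo with a small, hypothetical compressed log written to a temp folder.
demo_lines = [
    '66.xxx.7x.xx6 - - [01/Aug/2020:08:40:06 -0400] "GET /business/ HTTP/1.1" 200 9415 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.5 - - [01/Aug/2020:09:00:00 -0400] "GET / HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
with tempfile.TemporaryDirectory() as folder:
    demo_path = os.path.join(folder, "access.log.gz")
    with gzip.open(demo_path, "wt", encoding="utf-8") as out:
        out.write("\n".join(demo_lines) + "\n")
    print(count_googlebot_hits(demo_path))
```

Because `iter_log_lines` is a generator, memory use stays roughly constant whether the file covers one day or one year; only the running time grows with the date range.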

Benefits of Log File Analysis for SEO in 2022

Log file analysis for SEO brings the following benefits to your digital marketing activities:

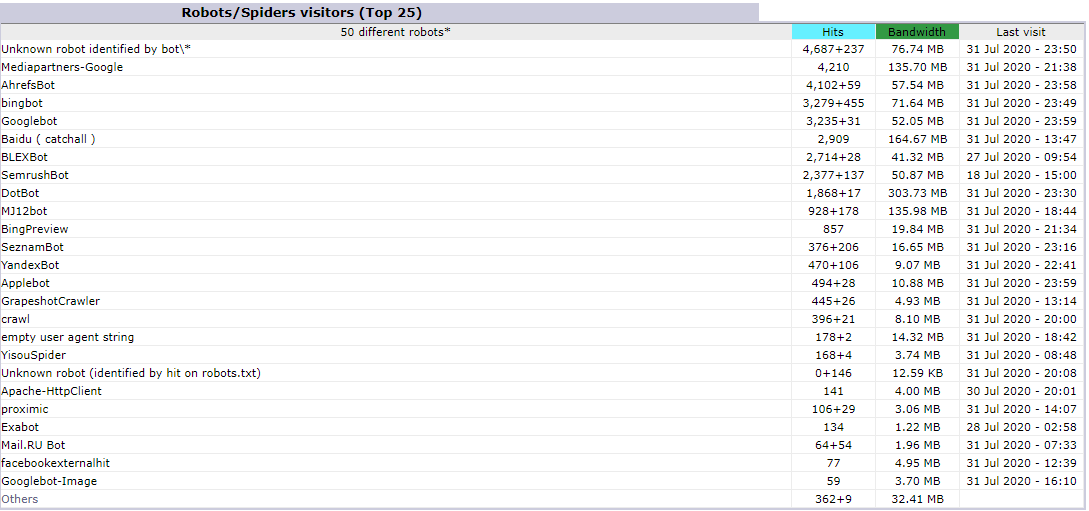

- You can find all robot activity on the website and block unnecessary robots with the robots.txt file to save bandwidth.

- It helps identify spam IPs that visit your website frequently just to inflate the bounce rate (make sure you follow regulations such as the General Data Protection Regulation (GDPR) before collecting them for market analysis).

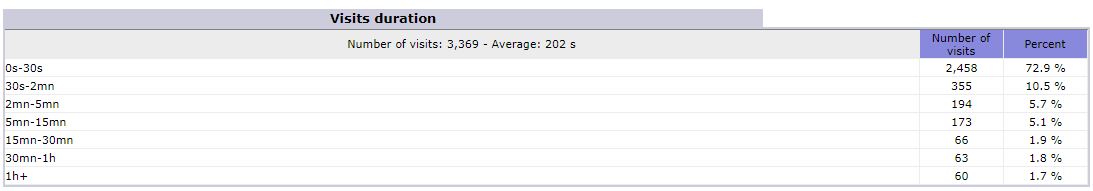

- Get insights on user visit duration for marketing and research, which Google Analytics doesn’t report accurately.

Remember: in Google Analytics, single-page sessions have a session duration of 0 seconds, which doesn’t help with digital marketing campaign analysis.

- You will get 100% accurate data on “Entry & Exit” pages for every URL visited.

- It provides more granular information on browsers, devices, and operating systems, so you can target and tune your digital marketing campaigns accordingly.

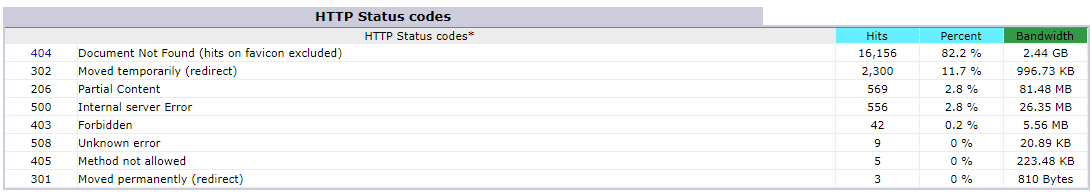

- You will get the complete “HTTP Status Codes” information to find errors and redirects.
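As a rough illustration of the first two points, the Python sketch below counts hits per user-agent and per client IP, which is usually the starting point for spotting unnecessary robots or suspicious IPs. It assumes the combined log format; the function name, the pattern, and the demo lines are hypothetical.

```python
import re
from collections import Counter

# Assumption: combined log format, so the client IP comes first and the
# user-agent is the last quoted field on each line.
LINE_PATTERN = re.compile(r'^(?P<ip>\S+) .*"(?P<user_agent>[^"]*)"\s*$')


def summarize_visitors(lines, top_n=5):
    """Return the most frequent user-agents and client IPs as (value, hits) pairs."""
    agents, ips = Counter(), Counter()
    for line in lines:
        match = LINE_PATTERN.match(line)
        if match:
            agents[match["user_agent"]] += 1
            ips[match["ip"]] += 1
    return agents.most_common(top_n), ips.most_common(top_n)


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
demo_lines = [
    f'66.xxx.7x.xx6 - - [01/Aug/2020:08:40:06 -0400] "GET /business/ HTTP/1.1" 200 9415 "-" "{GOOGLEBOT_UA}"',
    f'66.xxx.7x.xx6 - - [01/Aug/2020:08:41:10 -0400] "GET /about/ HTTP/1.1" 200 8120 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.5 - - [01/Aug/2020:09:00:00 -0400] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
top_agents, top_ips = summarize_visitors(demo_lines)
print(top_agents)
print(top_ips)
```

Keep in mind that robots.txt only works for bots that choose to obey it; a spam IP that ignores it generally has to be blocked at the server or firewall level instead.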

Log File Analysis SEO Report

The report tells you the following:

- Actual bot crawl volume

- Response code errors

- Bot crawl priority

- Last crawl date

- Temporary redirects

- Crawl budget waste

Analysing Actual Bot Crawl Volume

Crawl Budget in SEO

Crawl budget is the estimated number of pages that a specific search engine robot can crawl on a website within 24 hours.
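To measure the actual crawl volume behind a report like Crawl Stats, you can count bot requests per day straight from the log. Below is a minimal Python sketch, assuming the combined log format and treating any request whose user-agent contains “Googlebot” as a Googlebot hit. User-agents can be spoofed, so a real audit would also verify the IPs (Google documents a reverse DNS lookup for this), which this sketch doesn’t do. The function name and demo lines are hypothetical.

```python
import re
from collections import Counter
from datetime import datetime

DAY_PATTERN = re.compile(r"\[(\d{2}/\w{3}/\d{4}):")  # e.g. "[01/Aug/2020:"
AGENT_PATTERN = re.compile(r'"([^"]*)"\s*$')         # last quoted field = user-agent


def daily_crawl_volume(lines, bot_token="Googlebot"):
    """Count requests per calendar day whose user-agent contains bot_token."""
    per_day = Counter()
    for line in lines:
        agent = AGENT_PATTERN.search(line)
        day = DAY_PATTERN.search(line)
        if agent and day and bot_token in agent.group(1):
            # The day is taken in the server's local time; the offset is ignored.
            per_day[datetime.strptime(day.group(1), "%d/%b/%Y").date()] += 1
    return dict(sorted(per_day.items()))


UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
demo_lines = [
    f'66.xxx.7x.xx6 - - [01/Aug/2020:08:40:06 -0400] "GET /business/ HTTP/1.1" 200 9415 "-" "{UA}"',
    f'66.xxx.7x.xx6 - - [01/Aug/2020:14:02:51 -0400] "GET /about/ HTTP/1.1" 200 8120 "-" "{UA}"',
    f'66.xxx.7x.xx6 - - [02/Aug/2020:03:15:00 -0400] "GET / HTTP/1.1" 200 6002 "-" "{UA}"',
    '203.0.113.5 - - [02/Aug/2020:09:00:00 -0400] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
for day, hits in daily_crawl_volume(demo_lines).items():
    print(day, hits)
```

Comparing these daily counts with the number of URLs in your sitemap (537 crawled pages versus 90 submitted URLs in the earlier example) is what reveals how much of the crawl goes to pages you never asked to have indexed.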

The crawl budget should be used efficiently so that all the essential pages of a website get crawled. How often a search engine crawls a site depends mostly on how frequently the site is updated.

If the site has only a few pages, the crawl budget isn’t a big deal.

What if the site has more than 10,000 URLs to crawl?

Most eCommerce websites have many more pages to crawl, depending on the number of products added.

For example, if the crawler crawls only 500 URLs a day, it is the SEO’s responsibility to direct the crawler to spend that time on relevant pages and to avoid crawling scripts and unnecessary redirected pages.

This is possible with a strategically planned sitemap and an effectively implemented robots.txt file, used together with meta robots tags.

By doing this, you can keep crawl budget waste to a minimum.
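One way to put numbers on crawl budget waste is to list the URLs a bot requested that aren’t in your sitemap, and to check whether a proposed robots.txt rule would block them before you deploy it. The Python sketch below does both with the standard library’s `urllib.robotparser`. It assumes you have already extracted the Googlebot request paths from the log and the URL paths from your sitemap; all paths, rules, and the function name are hypothetical. Note that `urllib.robotparser` only does simple prefix matching and applies the first matching rule, while Google supports `*` and `$` wildcards and uses the most specific rule, so keep the rules you test this way simple.

```python
from collections import Counter
from urllib.robotparser import RobotFileParser


def crawl_waste_report(crawled_paths, sitemap_paths, robots_lines, agent="Googlebot"):
    """Summarise crawled paths that are missing from the sitemap.

    For each such path, report how often the bot requested it and whether the
    proposed robots.txt rules would block it.
    """
    rules = RobotFileParser()
    rules.parse(robots_lines)
    wasted = Counter(path for path in crawled_paths if path not in sitemap_paths)
    return {
        path: {"hits": hits, "blocked": not rules.can_fetch(agent, path)}
        for path, hits in wasted.most_common()
    }


# Hypothetical inputs: Googlebot request paths taken from the log, and the
# URL paths listed in the sitemap.
crawled = ["/business/", "/feed/", "/feed/", "/wp-json/oembed/1.0/", "/old-page/", "/business/"]
sitemap = {"/", "/business/", "/about/"}
proposed_robots = [
    "User-agent: *",
    "Disallow: /feed/",
    "Disallow: /wp-json/",
]
for path, info in crawl_waste_report(crawled, sitemap, proposed_robots).items():
    print(path, info)
```

Paths that show up with many hits but `"blocked": False` are the ones to look at next, whether the fix is a robots.txt rule, a meta robots tag, or removing internal links that point to them.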

Response Code & Temporary Redirects

With log file analysis, you can find all the important HTTP status codes for a website, from 2xx to 5xx.

Crawlers are often disrupted by server errors (5xx) and unnecessary temporary redirects (302/307), and they stop crawling. This is one of the primary reasons a website can take longer than expected to get indexed by the search engine.

With log file analysis for SEO, you can find 302 redirects and 404 and 500 errors, and take the necessary actions to improve crawling and other marketing functions.
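To pull these codes out of the log, a minimal Python sketch like the one below groups every non-2xx response served to Googlebot by status code and URL. It assumes the combined log format; the patterns, the `demo_line` helper, and the demo URLs are hypothetical.

```python
import re
from collections import Counter, defaultdict

# Assumption: combined log format; captures the requested URL and the status
# code that follows the quoted request line.
REQUEST_PATTERN = re.compile(r'"[A-Z]+ (?P<url>\S+) [^"]*" (?P<status>\d{3}) ')
AGENT_PATTERN = re.compile(r'"([^"]*)"\s*$')  # last quoted field = user-agent


def status_issues(lines, bot_token="Googlebot"):
    """Map each non-2xx status code served to the bot to a Counter of URLs."""
    issues = defaultdict(Counter)
    for line in lines:
        request = REQUEST_PATTERN.search(line)
        agent = AGENT_PATTERN.search(line)
        if request and agent and bot_token in agent.group(1):
            status = int(request["status"])
            if not 200 <= status < 300:
                issues[status][request["url"]] += 1
    return dict(issues)


def demo_line(url, status, agent="Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"):
    # Builds a hypothetical combined-format line for the demo below.
    return f'66.xxx.7x.xx6 - - [01/Aug/2020:08:40:06 -0400] "GET {url} HTTP/1.1" {status} 512 "-" "{agent}"'


demo_lines = [
    demo_line("/business/", 200),
    demo_line("/old-page/", 302),
    demo_line("/missing/", 404),
    demo_line("/missing/", 404),
    demo_line("/search/", 500),
    demo_line("/typo/", 404, agent="Mozilla/5.0 (Windows NT 10.0; Win64; x64)"),
]
for status, urls in sorted(status_issues(demo_lines).items()):
    print(status, dict(urls))
```

The 302 bucket lists the temporary redirects Googlebot keeps following, while the 404 and 500 buckets list the URLs that waste its time with errors. Requests from other user-agents (the `/typo/` line) are left out on purpose.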

Prioritize Your Important Pages for Crawling

By analyzing the log file, you will learn which pages crawlers visit most often and how much time they spend on them.

Based on this data, you can prioritize URLs in your sitemap and help crawlers crawl them in order of priority.
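Here is a minimal Python sketch of this step, assuming the combined log format: it ranks the URLs Googlebot requests most often and lists any pages you consider important that it never requested during the period the log covers. The `important_pages` set, the function name, and the demo URLs are hypothetical; in practice the set might come from your sitemap.

```python
import re
from collections import Counter

REQUEST_URL = re.compile(r'"[A-Z]+ (\S+) [^"]*"')  # URL inside "GET /path HTTP/1.1"
AGENT_PATTERN = re.compile(r'"([^"]*)"\s*$')      # last quoted field = user-agent


def crawl_priority_report(lines, important_pages, bot_token="Googlebot", top_n=10):
    """Return the bot's most-requested URLs and the important pages it never requested."""
    hits = Counter()
    for line in lines:
        url = REQUEST_URL.search(line)
        agent = AGENT_PATTERN.search(line)
        if url and agent and bot_token in agent.group(1):
            hits[url.group(1)] += 1
    # Counter returns 0 for missing keys without adding them.
    never_crawled = sorted(page for page in important_pages if hits[page] == 0)
    return hits.most_common(top_n), never_crawled


UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def demo_line(url):
    # Hypothetical Googlebot request in combined log format.
    return f'66.xxx.7x.xx6 - - [01/Aug/2020:08:40:06 -0400] "GET {url} HTTP/1.1" 200 512 "-" "{UA}"'


demo_lines = [demo_line(u) for u in ["/business/", "/tag/seo/", "/business/", "/about/", "/tag/seo/", "/business/"]]
top_pages, missed = crawl_priority_report(demo_lines, important_pages={"/", "/business/", "/services/"})
print(top_pages)
print(missed)
```

The default combined format doesn’t record how long each request took; for time spent, the server has to log an extra field (for example, Apache’s `%D`, the time taken to serve the request in microseconds).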

Last Crawl Date

The last crawl date is one of the most easily identified elements in log file analysis for SEO. With this data, you can refresh your content to encourage robots to crawl and index the updated page.
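The last crawl date per URL is easy to compute once the log is parsed. The Python sketch below keeps the most recent Googlebot request time for each URL and then lists pages not crawled within a chosen number of days. It assumes the combined log format; the 30-day threshold, the function names, and the demo lines are hypothetical choices for illustration.

```python
import re
from datetime import datetime, timedelta, timezone

# Timestamp and requested URL from a combined-format line.
ENTRY_PATTERN = re.compile(r'\[(?P<ts>[^\]]+)\] "[A-Z]+ (?P<url>\S+) ')
AGENT_PATTERN = re.compile(r'"([^"]*)"\s*$')  # last quoted field = user-agent


def last_crawl_dates(lines, bot_token="Googlebot"):
    """Return {url: most recent datetime the bot requested it}."""
    last_seen = {}
    for line in lines:
        entry = ENTRY_PATTERN.search(line)
        agent = AGENT_PATTERN.search(line)
        if not (entry and agent and bot_token in agent.group(1)):
            continue
        when = datetime.strptime(entry["ts"], "%d/%b/%Y:%H:%M:%S %z")
        url = entry["url"]
        if url not in last_seen or when > last_seen[url]:
            last_seen[url] = when
    return last_seen


def stale_pages(last_seen, now, max_age_days=30):
    """List URLs the bot hasn't requested within max_age_days of `now` (timezone-aware)."""
    cutoff = now - timedelta(days=max_age_days)
    return sorted(url for url, when in last_seen.items() if when < cutoff)


UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
demo_lines = [
    f'66.xxx.7x.xx6 - - [01/Aug/2020:08:40:06 -0400] "GET /business/ HTTP/1.1" 200 9415 "-" "{UA}"',
    f'66.xxx.7x.xx6 - - [15/Aug/2020:10:00:00 -0400] "GET /business/ HTTP/1.1" 200 9415 "-" "{UA}"',
    f'66.xxx.7x.xx6 - - [02/Jul/2020:12:00:00 -0400] "GET /about/ HTTP/1.1" 200 8120 "-" "{UA}"',
]
seen = last_crawl_dates(demo_lines)
print(stale_pages(seen, now=datetime(2020, 8, 20, tzinfo=timezone.utc)))
```

Pages that come out of `stale_pages` are natural candidates for the content refresh described above.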

How to Analyse a Server Log File?

You might have this question in mind: is it possible to analyse log files manually?

My answer is yes. It is quite possible with Excel plus hours of manual data interpretation. So, if you have enough time to complete your advanced SEO audit and no pressure from the developer or the client, you can analyse the server log files manually. That is a hypothetical situation for any SEO in the world; in other words, it doesn’t exist.
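If you do take the Excel route, a small script can at least remove the tedious first step of splitting each raw line into columns. This minimal Python sketch, assuming the combined log format, writes the parsed fields to CSV, which Excel opens directly. The function name, the column names, and the sample lines are hypothetical.

```python
import csv
import io
import re

# Assumption: combined log format. Group names double as the CSV column names.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<url>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)
COLUMNS = ["ip", "timestamp", "method", "url", "protocol", "status", "size", "referrer", "user_agent"]


def log_to_csv(lines, out):
    """Write matching log lines to `out` as CSV rows; return how many rows were written."""
    writer = csv.DictWriter(out, fieldnames=COLUMNS)
    writer.writeheader()
    written = 0
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match:  # lines in other formats are skipped, not guessed at
            writer.writerow(match.groupdict())
            written += 1
    return written


sample = (
    '66.xxx.7x.xx6 - - [01/Aug/2020:08:40:06 -0400] "GET /business/ HTTP/1.1" 200 9415 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
buffer = io.StringIO()
print(log_to_csv([sample, "a line that isn't a log entry"], buffer))
print(buffer.getvalue())
```

To write a real file, pass something like `open("access_log.csv", "w", newline="", encoding="utf-8")` (a hypothetical file name) as `out`; `newline=""` is what Python’s `csv` documentation recommends for files used with the `csv` module.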

But as SEO analysts, we always go for best practices.

Make the most of a log file analyser for auditing and managing reports. It eliminates hours of manual work and simplifies data segmentation into actionable outputs.

Must Read 👉 Top 10 reasons to try Dragon Metrics SEO Tool

List of Free Log File Analysers for SEO

There are any number of premium and free open-source log file analysers available for an advanced SEO audit.

The free version of Screaming Frog Log File Analyser is my personal favorite server log analyser on the internet.

Some other free log file analysers are:

- Graylog

- Nagios

- Elastic Stack

- LOGalyze

- Fluentd

Suganthan Mohanadasan, Co-Founder and Technical SEO at Snippet.Digital, has covered more insights on log file analysis reports, along with a Google Data Studio template. Through his survey, he also covered the issues SEOs face in getting log files from clients and developers.

Actionable Insights with Log File Analysis

After a complete analysis of the log files, you will have the following actionable insights for your SEO campaigns:

- Behavior of all robots on your website

- Crawl volume & visit frequency of robots

- HTTP status codes

- Desktop vs. Mobile bots

- Most crawled pages on the website

- Pages omitted by robots

- Individual robot behavior on pages

- Time spent by robots on the server for downloading pages

- Crawl error reports with 404 & 500

- Average response time
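For the “Desktop vs. Mobile bots” insight, one simple approach is to split Googlebot requests by user-agent. The Python sketch below assumes, based on the user-agent strings Google publishes, that the smartphone crawler’s user-agent contains “Mobile” and the desktop crawler’s doesn’t. The function name is hypothetical, and like any user-agent check it trusts the string as sent.

```python
from collections import Counter


def classify_googlebot(user_agent):
    """Label a user-agent as Googlebot 'smartphone', 'desktop', or 'other'.

    Assumption: Google's smartphone crawler includes "Mobile" in its user-agent,
    while the desktop crawler doesn't. Googlebot-Image and other specialised
    Google crawlers fall into 'other' because they lack "Googlebot/".
    """
    if "Googlebot/" not in user_agent:
        return "other"
    return "smartphone" if "Mobile" in user_agent else "desktop"


demo_agents = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    # W.X.Y.Z stands for the Chrome version, as in Google's documentation.
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/W.X.Y.Z Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Googlebot-Image/1.0",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
]
print(Counter(classify_googlebot(agent) for agent in demo_agents))
```

Running the same classifier over the user-agent field of every log entry gives the desktop vs. mobile split for the whole period.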

Thus, log file analysis should be on your SEO checklist for any advanced SEO audit and technical SEO optimization.

Now it’s your turn: audit your server log file for SEO and share your comments.
